The antidote to copy-and-pasting code

So you've decided to learn Unity because you want to build some awesome augmented reality (AR) apps.

And you've been told that AR Foundation is the way to go if you're using Unity.

Problem is, the documentation isn't super helpful for a newbie.

And if you go through a tutorial, or play with arfoundation-samples, you might feel like you're copy-and-pasting code – without truly understanding how it works.

Maybe you've been searching for that perfect online course on Udemy or Coursera. If only there were a solid, up-to-date course that'd give you a firm grasp of the basics, you'd be set!

If that describes you, then this blog post is for you.

In the rest of this post, I walk through the mental models you should grasp before writing any AR Foundation code, so that you understand what your AR code is actually doing.

(This post assumes that you know how to program and that you know your way around Unity. If you don't, it may be worth taking a Unity course on Udemy before learning AR Foundation.)

1. Everybody get up, it's time to SLAM now 🏀

At its core, AR is an illusion.

By overlaying virtual objects on top of your smartphone's camera feed, we make it seem like the virtual content is part of the real world.

But how do we know where to place the virtual objects?

This is done through a technique known as Simultaneous Localization And Mapping – SLAM, for short.

(You may also hear "concurrent odometry and mapping" or "markerless tracking". These terms emphasize different aspects, but they refer to the same technique.)

SLAM is basically this: if you lost your memory and woke up in a new room (so... you're a robot 😛), you would look around you to get a sense of where you are.

By seeing a wall 2 meters in front of you, and maybe a bedside table half a meter to your right, you'd be able to tell how you're moving relative to this starting place.

Your phone does the same thing. It detects feature points (visually distinct points of interest) in the camera feed, and uses how those points shift from frame to frame, along with motion sensor data, to estimate the phone's position and orientation relative to the world over time.
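To make the idea concrete, here's a toy sketch in Python (not C#, and not how ARKit or ARCore actually do it). It assumes a flat, top-down 2D world where we already know where each feature point sits relative to the phone in two consecutive frames, and that the points are already matched between frames; real SLAM has to estimate all of that in 3D from camera images plus motion sensor data. Under those assumptions, a least-squares rigid fit (the 2D version of the Kabsch/Procrustes method) recovers how the points shifted, and since the points themselves are fixed in the world, the phone's own motion is the inverse of that shift. The function names are made up for illustration.

```python
import math


def estimate_shift(prev_pts, curr_pts):
    """Least-squares 2D rotation (radians) and translation mapping prev_pts onto curr_pts.

    Hypothetical helper: a closed-form 2D Procrustes/Kabsch fit over matched points.
    """
    n = len(prev_pts)
    # Centroids of both point sets
    pcx = sum(x for x, _ in prev_pts) / n
    pcy = sum(y for _, y in prev_pts) / n
    ccx = sum(x for x, _ in curr_pts) / n
    ccy = sum(y for _, y in curr_pts) / n
    # Accumulate dot and cross products of the centered point pairs
    dot = cross = 0.0
    for (px, py), (cx, cy) in zip(prev_pts, curr_pts):
        px, py, cx, cy = px - pcx, py - pcy, cx - ccx, cy - ccy
        dot += px * cx + py * cy
        cross += px * cy - py * cx
    theta = math.atan2(cross, dot)
    # Translation that carries the rotated previous centroid onto the current centroid
    c, s = math.cos(theta), math.sin(theta)
    tx = ccx - (c * pcx - s * pcy)
    ty = ccy - (s * pcx + c * pcy)
    return theta, tx, ty


def phone_motion(prev_pts, curr_pts):
    """How the phone turned and moved, expressed in its previous frame (x right, y ahead)."""
    theta, tx, ty = estimate_shift(prev_pts, curr_pts)
    c, s = math.cos(theta), math.sin(theta)
    # The points stayed put, so the phone moved by the inverse transform: (R^T, -R^T t)
    return -theta, -(c * tx + s * ty), -(-s * tx + c * ty)


# A point on the wall 2 m ahead, the bedside table 0.5 m to the right, a lamp to the left.
before = [(0.0, 2.0), (0.5, 0.0), (-1.0, 1.0)]
# After stepping 1 m straight ahead, every point appears 1 m closer.
after = [(0.0, 1.0), (0.5, -1.0), (-1.0, 0.0)]
print(phone_motion(before, after))  # about 0 rad of turning and a move of about (0, 1)
```

Real trackers also have to cope with noisy and mismatched points (outliers), depth, and drift, which is where most of the hard computer vision work lives.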

With this understanding of its surroundings, your phone can do all sorts of things:

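- Planes. It can guess that a cluster of feature points lies on a flat surface, such as a wall or tabletop.

- Raycasting. It can check what a point on the screen (say, where you tapped) is pointing at in the real world, such as a plane, and use that info for user interaction.

- Anchors. It can pin virtual objects to fixed spots in the real world (for example, on a plane), so they stay put as you move around.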

- Multiplayer. It can synchronize feature points with other phones for multiplayer experiences.
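Here's a similarly toy sketch of the Planes and Raycasting bullets, again in Python and again not the real ARKit/ARCore algorithms. It assumes the feature points have already been grouped into one cluster, that the surface is horizontal (like a tabletop), and Unity-style axes where y points up. It fits the plane by averaging the points' heights, then intersects a ray from the camera with it. `HorizontalPlane`, `fit_horizontal_plane`, and `raycast` are hypothetical names; in AR Foundation you'd get this work from components such as `ARPlaneManager` and `ARRaycastManager` instead.

```python
import math
from dataclasses import dataclass
from statistics import mean


@dataclass
class HorizontalPlane:
    height: float  # y coordinate of the plane (y-up, Unity-style axes)
    min_x: float
    max_x: float
    min_z: float
    max_z: float


def fit_horizontal_plane(points):
    """Guess a horizontal plane (e.g. a tabletop) from a cluster of 3D feature points."""
    xs = [x for x, _, _ in points]
    ys = [y for _, y, _ in points]
    zs = [z for _, _, z in points]
    # Toy assumption: the cluster is roughly flat, so its average height is the plane's
    # height, and its x/z extent is the detected area.
    return HorizontalPlane(mean(ys), min(xs), max(xs), min(zs), max(zs))


def raycast(origin, direction, plane):
    """Return the (x, y, z) point where the ray hits the detected plane, or None."""
    ox, oy, oz = origin
    dx, dy, dz = direction
    if math.isclose(dy, 0.0, abs_tol=1e-9):
        return None  # ray runs parallel to a horizontal plane
    t = (plane.height - oy) / dy
    if t <= 0:
        return None  # the plane is behind the camera
    hx, hz = ox + t * dx, oz + t * dz
    if not (plane.min_x <= hx <= plane.max_x and plane.min_z <= hz <= plane.max_z):
        return None  # hits the infinite plane, but outside the detected area
    return (hx, plane.height, hz)


# Slightly noisy feature points on a tabletop about 0.7 m high, 1 to 1.6 m ahead.
table = fit_horizontal_plane(
    [(0.0, 0.71, 1.0), (0.5, 0.69, 1.2), (0.4, 0.70, 1.6), (-0.2, 0.70, 1.5)]
)
camera = (0.0, 1.5, 0.0)  # phone held at 1.5 m
print(raycast(camera, (0.1, -0.8, 1.2), table))  # roughly (0.1, 0.7, 1.2)
print(raycast(camera, (0.0, 1.0, 1.0), table))   # None: pointing at the ceiling
```

An anchor, in this toy picture, would simply be a saved hit point that you keep your virtual object attached to.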

And that's just the tip of the iceberg: AR is a whole grab-bag of techniques. But SLAM is at the core.

2. Understanding AR Foundation

Sounds great... in theory, right?

Well, not quite. You still gotta build everything we described.

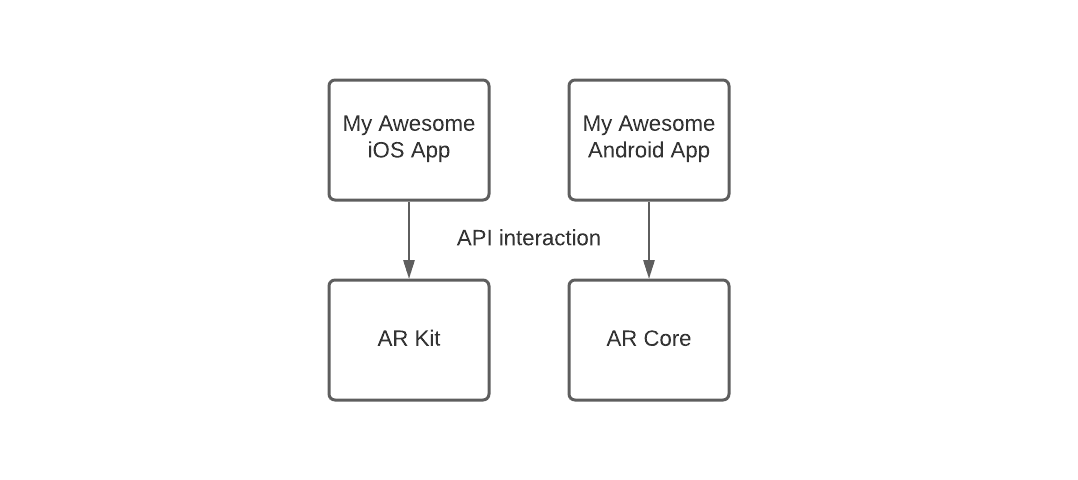

Luckily for us, the brilliant computer vision engineers at Apple and Google have written the code to do SLAM (and other techniques) for us. And they've exposed that functionality as APIs in ARKit and ARCore:

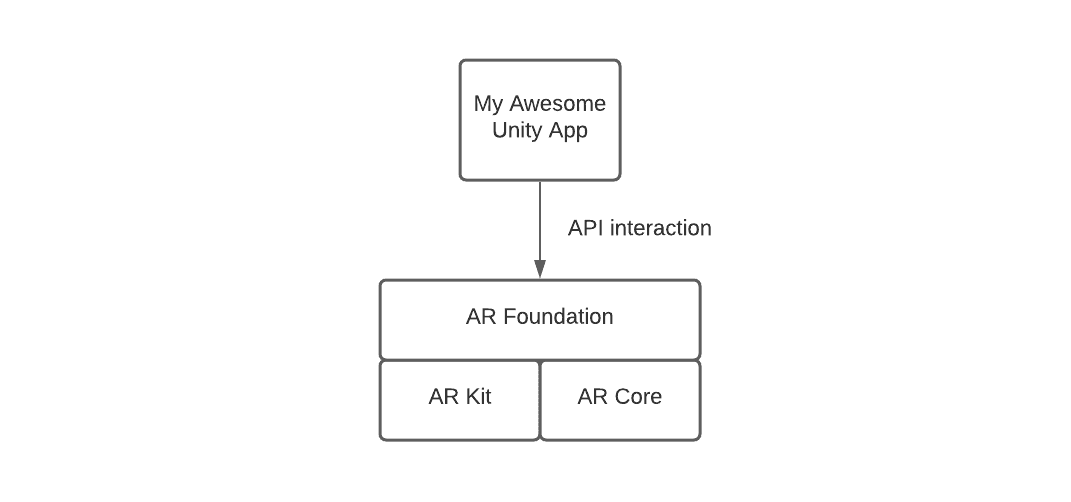

The engineers at Unity take that generosity one step further. They basically say: "hey Apple and Google, both your APIs do the same thing, so we're going to write a wrapper layer that abstracts away both your APIs."
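In code terms, a wrapper layer like this is the classic adapter pattern: app code talks to one interface, and a platform-specific implementation plugs in underneath. The Python sketch below is purely illustrative; every class and function name in it is hypothetical. AR Foundation's real version lives in C#, built around what Unity calls subsystems, with separate provider packages for ARKit and ARCore.

```python
from abc import ABC, abstractmethod
from typing import List


class PlaneProvider(ABC):
    """The one interface that app code talks to (hypothetical)."""

    @abstractmethod
    def detect_planes(self) -> List[str]:
        ...


class ARKitPlaneProvider(PlaneProvider):
    def detect_planes(self) -> List[str]:
        # Stand-in for calls into Apple's ARKit
        return ["plane found via ARKit"]


class ARCorePlaneProvider(PlaneProvider):
    def detect_planes(self) -> List[str]:
        # Stand-in for calls into Google's ARCore
        return ["plane found via ARCore"]


def make_provider(platform: str) -> PlaneProvider:
    """Pick the implementation for the platform the app is running on."""
    providers = {"ios": ARKitPlaneProvider, "android": ARCorePlaneProvider}
    return providers[platform]()


def place_furniture(provider: PlaneProvider) -> List[str]:
    # App code only knows the interface, not which platform sits underneath.
    return provider.detect_planes()


print(place_furniture(make_provider("ios")))
print(place_furniture(make_provider("android")))
```

The payoff is that `place_furniture` never changes when you switch platforms; only the provider underneath does.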

The end result? You have one clean interface to learn: that of AR Foundation.

And that's where we come in. We want to learn AR Foundation's interface, so that we can focus on building the awesome AR experiences on top.

Let specialist computer vision engineers build the Lego bricks; we build the awesome Lego models.

3. Test your understanding

To test your understanding of the concepts above, I present a simple exercise.

Go through the features that AR Foundation exposes (they're listed in the notes table below).

For each feature, write one sentence about how you think it relates to the other features. For example:

* Device tracking - Implements SLAM, which tells us where the device is relative to where it started.

* Point clouds - A collection of feature points.

* Plane detection - Detects planes from a point cloud of feature points.

* Anchor - ...

* Light estimation - ...

* ...

There's no single right answer. The whole point is to start building your mental model of how AR works.

As you learn more, you'll revise your mental model. But having any mental model at all lets you approach an AR Foundation tutorial with a firm footing.

For your convenience, you can take notes in the table below:

| Concept | Notes |
|---|---|
| device tracking | |
| plane detection | |
| point clouds | |
| anchor | |
| light estimation | |
| environment probe | |
| face tracking | |
| 2D image tracking | |
| 3D object tracking | |
| meshing | |
| body tracking | |
| collaborative participants | |
| human segmentation | |
| raycast | |
| pass-through video | |
| session management | |
| occlusion | |